Now that we have a basic understanding of the background behind building a neural network (NN), let's talk in more detail about the GAN.

Generative Adversarial Networks (GANs)

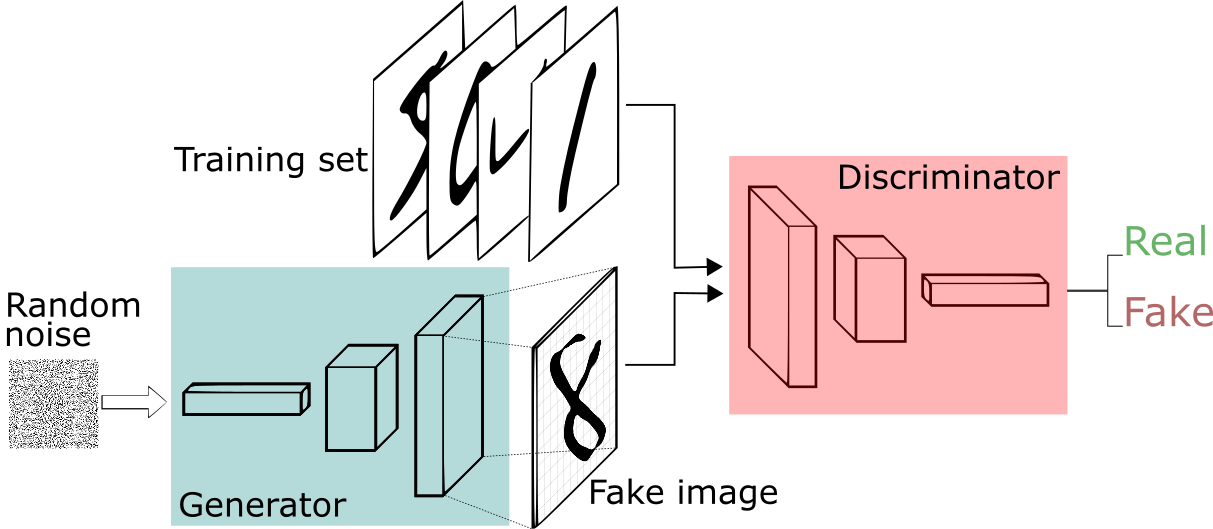

GANs are usually made up of two neural networks that compete against each other in order to learn to generate new data. This is commonly done for images, and some examples can be found here. A more intuitive way to think about a GAN is as a game of cops and robbers: the cops try to determine whether something is a forgery, and the robbers try to create a forgery that will trick the cops.

The parts of a GAN

A GAN is usually broken up into two separate parts: the generator and the discriminator. In our previous example, the generator is the robber and the discriminator is the cops. The generator usually takes in some random noise and transforms it into something that resembles the expected data, although at first its output will not be any good. The output of the generator is then passed to the discriminator, which decides whether it is real data or generated data.
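To make this data flow concrete, here is a minimal sketch of how one batch for the discriminator could be assembled. Everything in it is an illustrative assumption: the "real" data are single numbers drawn from a normal distribution N(4, 1), and the hypothetical `generator` (an untrained affine map of the noise) and `discriminator` (a fixed logistic score) stand in for real neural networks. The point is only the pipeline: noise goes into the generator, real and generated samples are mixed, and the discriminator scores each one against a label of 1 (real) or 0 (generated).

```python
import math
import random

# Hypothetical toy setup: "real" data are single numbers drawn from N(4, 1).
def sample_real(n, rng):
    return [rng.gauss(4.0, 1.0) for _ in range(n)]

def sample_noise(n, rng):
    # Random noise that the generator turns into candidate data.
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

def generator(z, a=1.0, b=0.0):
    # Untrained toy generator: an affine map of the noise (a and b are arbitrary).
    return a * z + b

def discriminator(x, w=1.0, c=-2.0):
    # Toy discriminator: logistic score in (0, 1), read as "probability x is real".
    return 1.0 / (1.0 + math.exp(-(w * x + c)))

def discriminator_batch(n, rng):
    """Assemble one batch that the discriminator is judged on."""
    real = sample_real(n, rng)
    fake = [generator(z) for z in sample_noise(n, rng)]  # the robber's forgeries
    inputs = real + fake
    labels = [1] * n + [0] * n                             # 1 = real, 0 = generated
    preds = [discriminator(x) for x in inputs]             # the cops' verdicts
    return inputs, labels, preds

rng = random.Random(0)
inputs, labels, preds = discriminator_batch(4, rng)
print(labels)  # [1, 1, 1, 1, 0, 0, 0, 0]
```

In a real GAN both functions would be trainable networks, and these labels and predictions are exactly what the BCE cost function described later compares.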

One thing to note is that this is something of a symbiotic relationship: as the discriminator grows, so does the generator. This is why they need to start off with similar levels of accuracy. If they were extremely disproportionate, the other one would not be able to grow. For example, if the generator develops forgeries so good that they always trick the discriminator, then the generator would have no reason to do any better.

Discriminator

This is usually a classifier that takes in an input and determines which class it falls into. For simplicity, we can use a neural network that takes in an image and outputs a single value between 0 and 1: close to 1 for yes (real) and close to 0 for no (fake).
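A minimal sketch of such a classifier, assuming the simplest possible network: a single logistic-regression layer over the flattened pixels (real discriminators usually stack several layers, often convolutional ones). The weights below are made up for a 2x2 image; in practice they are learned during training.

```python
import math

def sigmoid(a):
    # Numerically stable logistic function: maps any real number into (0, 1).
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def discriminate(pixels, weights, bias):
    """Single-layer discriminator.

    pixels: flattened image (e.g. a 28x28 image becomes 784 floats).
    Returns a score in (0, 1): near 1 means "real", near 0 means "fake".
    """
    if len(pixels) != len(weights):
        raise ValueError("one weight per pixel is required")
    logit = sum(p * w for p, w in zip(pixels, weights)) + bias
    return sigmoid(logit)

def classify(pixels, weights, bias, threshold=0.5):
    # Turn the score into the hard answer: 1 for yes (real), 0 for no (fake).
    return 1 if discriminate(pixels, weights, bias) >= threshold else 0

weights = [-1.0, 1.0, 1.0, -1.0]  # hypothetical weights for a 2x2 image
print(classify([0.0, 1.0, 1.0, 0.0], weights, 0.0))  # logit 2, score ~0.88 -> 1
print(classify([1.0, 0.0, 0.0, 1.0], weights, 0.0))  # logit -2, score ~0.12 -> 0
```

During training the raw score from `discriminate`, not the thresholded 0/1, is what goes into the cost function, because the cost needs a smooth value to compute gradients from.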

Generator

This follows the same idea as the decoder half of an autoencoder: it tries to learn the latent feature space of the data and, given some input, transform it into something similar in that feature space. The generator usually takes in a noise vector (randomly initialized input values) and, in a conditional GAN, also the class that the output is supposed to represent. Its output is a value for each individual pixel of the new image.
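Here is a minimal sketch of a conditional generator under deliberately simple assumptions: one linear layer with random, untrained weights, a one-hot vector for the class, and a sigmoid so every pixel intensity lands in (0, 1). The sizes (8 noise values, 10 classes, a 4x4 image) and the helper names `make_generator` and `generate` are hypothetical; real generators are deeper networks.

```python
import math
import random

def sigmoid(a):
    # Numerically stable logistic function: keeps pixel values in (0, 1).
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def one_hot(label, num_classes):
    # Encode the class the output is supposed to represent.
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def make_generator(noise_dim, num_classes, num_pixels, seed=0):
    # Hypothetical single-layer generator with random (untrained) weights.
    rng = random.Random(seed)
    in_dim = noise_dim + num_classes
    weights = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in range(num_pixels)]
    biases = [0.0] * num_pixels
    return weights, biases

def generate(noise, label, params, num_classes):
    weights, biases = params
    x = list(noise) + one_hot(label, num_classes)  # noise + class condition
    if len(x) != len(weights[0]):
        raise ValueError("noise_dim + num_classes must match the generator's input size")
    # One output per pixel of the new image.
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

noise_dim, num_classes, num_pixels = 8, 10, 16  # 16 pixels = a 4x4 image
params = make_generator(noise_dim, num_classes, num_pixels)
rng = random.Random(1)
z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
image = generate(z, label=3, params=params, num_classes=num_classes)
print(len(image))  # 16
```

Because the class is concatenated onto the noise, the same noise vector with a different `label` produces a different image; training is what makes those images actually look like the requested class.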

Binary Cross Entropy (BCE) Cost Function:

This is the most common cost function used with GANs, and more generally with binary (two-class) classifiers. Here the labels are 1 for real and 0 for fake. The BCE function is

$$f(\theta) = -\frac{1}{m}\sum_{i=1}^m \left[ Q(\theta) + Z(\theta) \right]$$

$$Q(\theta) = y^i \log\left(pred(x^i,\theta)\right)$$

$$Z(\theta) = (1-y^i) \log\left(1 - pred(x^i,\theta)\right)$$

Broken down term by term:

$$\frac{1}{m} \sum_{i=1}^m: \text{the average over all } m \text{ examples in the batch}$$

$$y^i \log\left(pred(x^i,\theta)\right): \text{compares the prediction to the true label when the label is 1}$$

$$(1-y^i) \log\left(1 - pred(x^i,\theta)\right): \text{compares the prediction to the true label when the label is 0}$$

The negative sign in front ensures that the whole cost is greater than or equal to zero: since $pred(x^i,\theta)$ lies between 0 and 1, both logarithms are less than or equal to zero.
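The formula translates almost line for line into code. This is a minimal sketch, not a library implementation: `labels` holds the $y^i$ values, `preds` holds the $pred(x^i,\theta)$ values, and the small `eps` clipping is an added safeguard (not part of the formula) so that a prediction of exactly 0 or 1 never produces $\log(0)$.

```python
import math

def bce(labels, preds, eps=1e-12):
    """Binary cross-entropy averaged over a batch of m examples.

    labels: y^i in {0, 1} (1 = real, 0 = fake)
    preds:  pred(x^i, theta) in (0, 1), the discriminator's outputs
    eps:    clipping bound to avoid log(0); an added safeguard
    """
    if not labels or len(labels) != len(preds):
        raise ValueError("labels and preds must be non-empty and the same length")
    total = 0.0
    for y, p in zip(labels, preds):
        p = min(max(p, eps), 1.0 - eps)
        q = y * math.log(p)              # Q term: only active when y = 1
        z = (1 - y) * math.log(1.0 - p)  # Z term: only active when y = 0
        total += q + z
    return -total / len(labels)          # leading minus makes the cost >= 0

print(bce([1, 0], [0.9, 0.1]))  # confident and correct: about 0.105
print(bce([1, 0], [0.1, 0.9]))  # confident and wrong:   about 2.303
```

Confident, correct predictions give a cost near zero, while confident, wrong predictions are penalized heavily, which is what pushes the discriminator toward the right answer.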

Problems with BCE Cost Function:

While this cost function is commonly used and makes a good introduction to GANs, it is not a one-size-fits-all cost function, and it has issues that I believe should be pointed out before moving forward. The discriminator wants to minimize this cost, while the generator wants to maximize it. Since the generator only produces the fake samples ($y^i = 0$), it can only influence the $Z(\theta)$ terms: it tries to push $pred(x^i,\theta)$ toward 1 on its own outputs, while the discriminator tries to push the prediction toward 1 on real data and toward 0 on generated data. The overall goal is for the GAN to learn the feature space of the input and generate data in that feature space, and the learned feature space should get closer to the actual feature space over time. However, the discriminator only has to output a single value in the range (0, 1), while the generator works on the far more complex task of figuring out the feature space.
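A small sketch can make the two opposing goals concrete. The function names below are hypothetical, and the predictions are made-up discriminator outputs rather than results from a trained model. `discriminator_loss` is the BCE with label 1 on real samples and 0 on fake ones. `generator_minimax_loss` keeps only the $Z(\theta)$ terms, because those are the only ones the generator can change; maximizing the BCE with respect to the generator is the same as minimizing the average of $\log(1 - pred)$ over its fakes.

```python
import math

def discriminator_loss(real_preds, fake_preds):
    # BCE with label 1 for real and 0 for fake; the discriminator minimizes this.
    real_term = sum(math.log(p) for p in real_preds)        # Q terms (y = 1)
    fake_term = sum(math.log(1.0 - p) for p in fake_preds)  # Z terms (y = 0)
    m = len(real_preds) + len(fake_preds)
    return -(real_term + fake_term) / m

def generator_minimax_loss(fake_preds):
    # The generator only changes fake_preds, so only the Z terms matter to it.
    # It minimizes mean log(1 - pred), i.e. pushes pred toward 1 on its fakes.
    return sum(math.log(1.0 - p) for p in fake_preds) / len(fake_preds)

real_preds = [0.9, 0.8]
caught = [0.2, 0.1]  # the discriminator spots the fakes
fooled = [0.8, 0.9]  # the fakes pass as real

print(discriminator_loss(real_preds, caught), discriminator_loss(real_preds, fooled))
print(generator_minimax_loss(caught), generator_minimax_loss(fooled))
```

The same change in the fake predictions lowers one player's loss and raises the other's, which is the adversarial tug-of-war described above.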

When training, we usually see the discriminator's accuracy increase very quickly, since telling real from fake is an easier task than generating convincing data. Once the discriminator confidently rejects the generated samples, the generator's term $\log(1 - pred(x^i,\theta))$ flattens out and passes back very little gradient, which is known as the vanishing gradient problem. As the generator does get better, the two become harder to tell apart and the discriminator's accuracy growth slows down. There are other types of loss functions that can be used for GANs, and it is recommended to go over them to figure out which is the best to use!
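The vanishing gradient can be checked with a short worked derivative. Assume the discriminator's output on a fake sample is $pred = \sigma(a)$ for a logit $a$. Then $\frac{d}{da}\log(1 - \sigma(a)) = -\sigma(a)$, which goes to 0 exactly when the discriminator is confident the sample is fake. For comparison, the sketch also computes the gradient of a common alternative generator objective, $-\log(\sigma(a))$, often called the non-saturating loss; that alternative is not part of the BCE setup above and is included only as a point of contrast. Its derivative is $-(1 - \sigma(a))$, which stays near $-1$ in the same situation.

```python
import math

def sigmoid(a):
    # Numerically stable logistic function.
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def minimax_grad(logit):
    # d/d(logit) of log(1 - sigmoid(logit)): the generator's Z term from the BCE.
    return -sigmoid(logit)

def non_saturating_grad(logit):
    # d/d(logit) of -log(sigmoid(logit)): the alternative objective, for contrast.
    return -(1.0 - sigmoid(logit))

# Very negative logits = the discriminator is sure the sample is fake.
for logit in (-8.0, -4.0, 0.0):
    print(f"pred={sigmoid(logit):.4f}  "
          f"minimax grad={minimax_grad(logit):.4f}  "
          f"non-saturating grad={non_saturating_grad(logit):.4f}")
```

When the discriminator is winning easily (`pred` near 0), the minimax gradient is nearly zero, so the generator barely learns; this is one reason alternative loss functions are worth exploring.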

To view the code for a simple GAN, check it out here.